Designing mRNA Vaccines with a Large Language Model

mRNA design space is pretty big, and LLMs could help find the optimum vaccine sequence.

It’s not an exaggeration to say that working mRNA COVID vaccines are one of the biggest lucky breaks in the history of science. When the coronavirus pandemic hit, there was no working mRNA vaccine for anything, anywhere. mRNA vaccines had been “promising” for a long time. In 2018, a group of vaccine biologists (including future Nobel laureate Drew Weissman) wrote a review of the field in which they noted that some mRNA vaccines in development “have demonstrated encouraging results in both animal models and humans.” That’s not the description you expect of a technology that would, a mere three years later, turn the tide against a worldwide pandemic. There weren’t many published human studies of mRNA vaccines, and none of them was anything more than “encouraging.” Back in March 2020, I wrote that “because [mRNA vaccine] technology is still so new, there is likely a long road ahead before we see a safe, effective mRNA vaccine brought to bear on a pandemic outbreak. However, we could get lucky.”

We did indeed get lucky, and mRNA vaccines may yet become even more consequential. The technology is so versatile, with such strong advantages in design and manufacturing, that we might realize long-hoped-for cancer vaccines and cures for autoimmune diseases. Realizing those hopes with mRNA technologies means overcoming several outstanding challenges. One is to discover the right biological targets. mRNA vaccines work by encoding a protein antigen that triggers the specific immune response you want. Once inside your cells, the vaccine mRNA serves as a template for synthesis of that protein antigen. In the case of SARS-CoV-2, nearly two decades of work after the 2002 SARS outbreak meant that by 2020, vaccine developers knew which antigen to use: the coronavirus spike protein. For other mRNA applications, identifying an effective antigen is an ongoing research task.

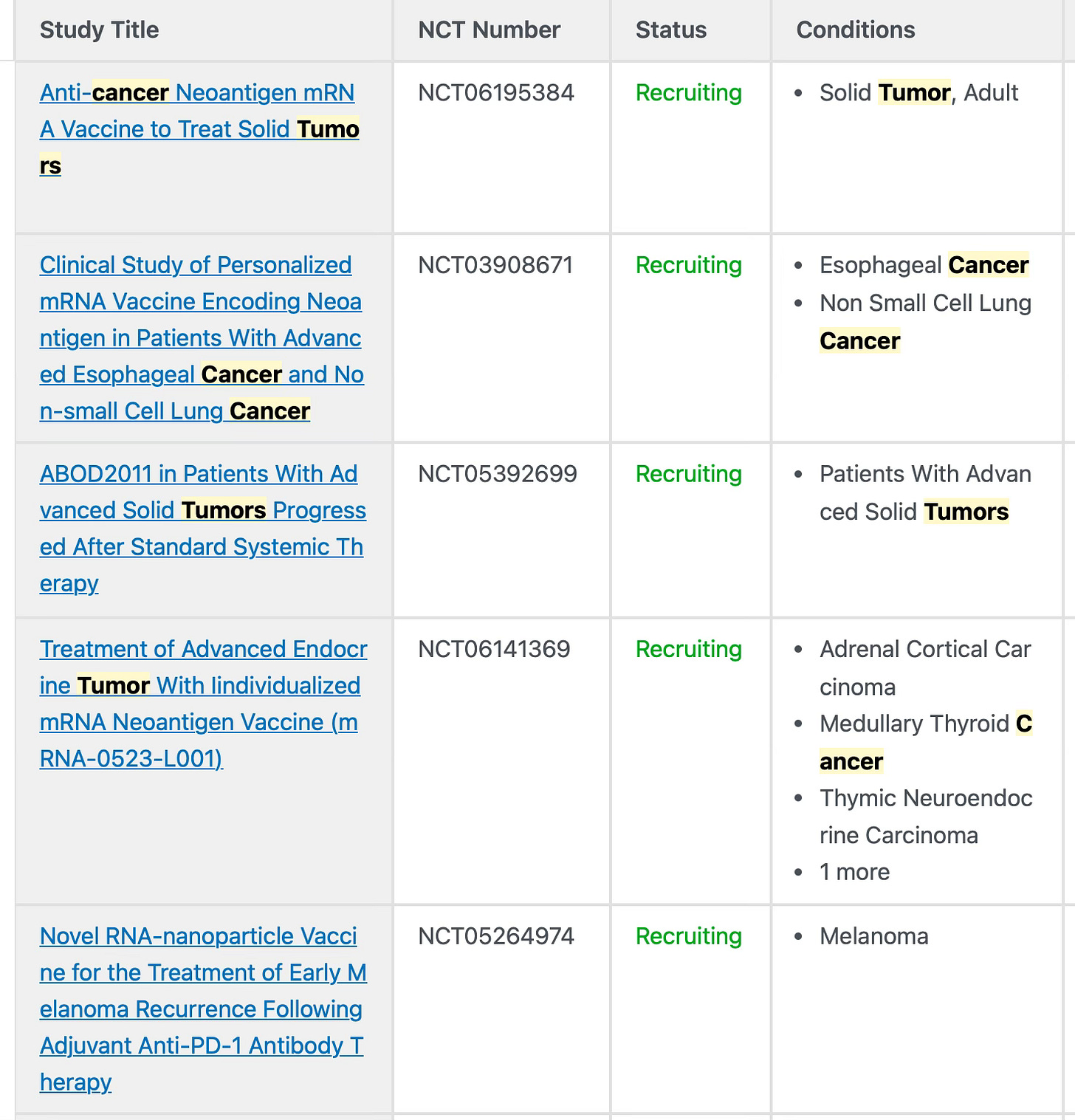

A few of the ongoing mRNA cancer vaccine trials registered at clinicaltrials.gov.

There is another crucial element of mRNA vaccine design, however, even when you have a good antigen. To design an effective mRNA vaccine with minimal side effects, you want to maximize expression of the protein antigen while minimizing the vaccine dose. In practice, this means coming up with an mRNA sequence that degrades slowly in vivo and is optimized for high protein production. The sequence design problem isn’t trivial, because many factors in the cell affect mRNA stability and the efficiency with which it is translated into protein. But there are also many degrees of freedom here: an under-appreciated fact about proteins is that any particular protein can be encoded by many possible mRNAs. A quick glance at the genetic code reminds you that every amino acid except methionine and tryptophan is encoded by two to six triplet codons. A rough estimate is that for a protein of N amino acids, there are 3^N mRNA sequences that could encode it. For a 100-amino-acid protein, that’s about 5 × 10^47 possible sequences.
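To make the counting argument concrete, here is a minimal Python sketch (my own illustration, not from the paper) that builds the standard genetic code from its conventional 64-letter compact string and multiplies together the number of synonymous codons for each residue. The 3^N rule of thumb comes from 61 sense codons spread over 20 amino acids, or about 3 per amino acid on average; the exact count depends on the protein's composition. The function names are hypothetical, and the count ignores the stop codon and untranslated regions.

```python
from itertools import product
from math import prod

# Standard genetic code, built from the conventional compact 64-letter string.
# Codons are enumerated with bases in U, C, A, G order (third base varying fastest);
# "*" marks the three stop codons.
BASES = "UCAG"
AMINO_ACIDS = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODON_TABLE = {"".join(c): aa for c, aa in zip(product(BASES, repeat=3), AMINO_ACIDS)}


def codons_per_amino_acid():
    """Number of codons for each amino acid (and for '*', stop)."""
    counts = {}
    for aa in CODON_TABLE.values():
        counts[aa] = counts.get(aa, 0) + 1
    return counts


def count_encodings(protein):
    """Exact number of coding sequences that translate to `protein`
    (stop codon and untranslated regions not included)."""
    degeneracy = codons_per_amino_acid()
    return prod(degeneracy[aa] for aa in protein)


def rough_estimate(protein):
    """The 3^N rule of thumb: 61 sense codons / 20 amino acids is about 3."""
    return 3 ** len(protein)
```

For the hypothetical 10-residue peptide `MKTAYIAKQR`, `count_encodings` returns 18,432, compared with 3^10 = 59,049 from the rough estimate; the exact count is lower here because several residues (M, K, Y, Q) have fewer than three codons.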

mRNA design virtually never takes advantage of that huge sequence space. Sequences are typically codon-optimized, meaning that you pick the codons preferred by the species in which the mRNA will be used. But many other factors affect mRNA stability and expression, particularly the loops and other folded structures that RNAs adopt. If your goal is to design a stable, translationally efficient mRNA, how do you do it?
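Here is a minimal sketch of naive codon optimization, together with the Codon Adaptation Index (CAI), a standard score for how closely a sequence matches a species’ codon preferences. The numbers in `TOY_USAGE` are made up for illustration and cover only three amino acids; a real pipeline would load a measured codon usage table for the target species. All names here are hypothetical.

```python
import math
from itertools import product

# Standard genetic code (U, C, A, G ordering; "*" = stop).
BASES = "UCAG"
AMINO_ACIDS = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODON_TABLE = {"".join(c): aa for c, aa in zip(product(BASES, repeat=3), AMINO_ACIDS)}

# Hypothetical, made-up codon frequencies for three amino acids (not real data).
TOY_USAGE = {
    "AUG": 1.0,                                      # Met
    "AAA": 0.4, "AAG": 0.6,                          # Lys
    "GCU": 0.3, "GCC": 0.4, "GCA": 0.2, "GCG": 0.1,  # Ala
}


def preferred_codons(usage):
    """Map each amino acid to its most frequently used codon."""
    best = {}
    for codon, freq in usage.items():
        aa = CODON_TABLE[codon]
        if aa not in best or freq > usage[best[aa]]:
            best[aa] = codon
    return best


def codon_optimize(protein, usage):
    """Naive codon optimization: always use the single preferred codon."""
    best = preferred_codons(usage)
    return "".join(best[aa] for aa in protein)


def cai(mrna, usage):
    """Codon Adaptation Index: geometric mean over codons of
    w = usage(codon) / usage(most-used synonymous codon).
    Simplification: single-codon amino acids are not excluded here."""
    best = preferred_codons(usage)
    codons = [mrna[i:i + 3] for i in range(0, len(mrna), 3)]
    log_w = [math.log(usage[c] / usage[best[CODON_TABLE[c]]]) for c in codons]
    return math.exp(sum(log_w) / len(log_w))
```

`codon_optimize("MKA", TOY_USAGE)` gives `AUGAAGGCC` with a CAI of 1.0, while the synonymous `AUGAAAGCG` scores roughly 0.55. Note that this rule maps each protein to exactly one sequence, so it ignores nearly all of the space counted earlier.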

This is a great task for an LLM. Scientists at Sanofi have a new paper out in Genome Research describing CodonBERT, an LLM that predicts the expression and stability of mRNAs. (BERT stands for Bidirectional Encoder Representations from Transformers and is a type of language model.) The model was pre-trained on 10 million mRNA sequences from mammals, bacteria, and human viruses. Pre-training a model like this involves masking out portions of an mRNA sequence and asking the model to fill in the masked portions. One of the critical features of CodonBERT is that it uses codons, rather than single nucleotides, as tokens, so the model learns to represent sequences codon by codon rather than as raw nucleotide strings. Pre-trained CodonBERT learned to identify different codons and the surrounding sequence contexts in which they occur. After pre-training, the researchers trained CodonBERT to predict the stability or translation efficiency of mRNAs, using different datasets. Because the team works at Sanofi, they had access to a reasonably sized mRNA flu vaccine dataset, in addition to other non-vaccine mRNA datasets.
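The sketch below illustrates codon-level tokenization and BERT-style masking. It is not CodonBERT’s code: the special tokens, the 15% masking rate (the default from the original BERT paper), and the -100 “ignore” label (a PyTorch loss convention) are assumptions, and it skips BERT’s 80/10/10 replacement rule by always substituting `[MASK]`. The token ids would then feed a Transformer encoder, which is not shown.

```python
import random
from itertools import product

# Assumed vocabulary: a few BERT-style special tokens plus all 64 codons.
SPECIAL_TOKENS = ["[PAD]", "[CLS]", "[SEP]", "[MASK]", "[UNK]"]
CODONS = ["".join(c) for c in product("ACGU", repeat=3)]
VOCAB = {tok: i for i, tok in enumerate(SPECIAL_TOKENS + CODONS)}


def tokenize(mrna):
    """Split a coding sequence into codon tokens (3 nt each) and map them to ids."""
    if len(mrna) % 3:
        raise ValueError("coding sequence length must be a multiple of 3")
    codons = [mrna[i:i + 3] for i in range(0, len(mrna), 3)]
    ids = [VOCAB.get(c, VOCAB["[UNK]"]) for c in codons]
    return [VOCAB["[CLS]"]] + ids + [VOCAB["[SEP]"]]


def mask_tokens(ids, mask_prob=0.15, rng=None):
    """Hide a random subset of codon tokens. Returns (inputs, labels): labels hold
    the original id at masked positions and -100 (ignored by the loss) elsewhere."""
    rng = rng or random.Random()
    special = {VOCAB[t] for t in SPECIAL_TOKENS}
    inputs, labels = list(ids), [-100] * len(ids)
    for i, tok in enumerate(ids):
        if tok not in special and rng.random() < mask_prob:
            labels[i] = tok
            inputs[i] = VOCAB["[MASK]"]
    return inputs, labels
```

With 64 codons plus 5 special tokens the vocabulary has 69 entries, versus 4 nucleotides (plus specials) for a nucleotide-level model; each token therefore carries the codon-choice information directly.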

CodonBERT outperformed other models at most prediction tasks, although it was only second-best at predicting mRNA stability. Crucially, CodonBERT was the best at predicting protein expression from the real mRNA flu vaccine dataset. All in all, these results are, well, promising. Much of the real promise, as the authors note, comes from the fact that LLMs can be used as generative models. So rather than just predicting how well a human-designed sequence will do, CodonBERT can generate promising sequences from the enormous space of possible sequences.
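To illustrate what searching the synonymous sequence space with a model in the loop might look like, here is a generic stochastic hill-climbing sketch. It is a search loop rather than true generative sampling, and it is not the method from the paper: `gc_score` is a hypothetical stand-in scoring function (closeness of GC content to an arbitrary target), where in practice you would plug in a trained predictor of expression or stability. Because each mutation only swaps one codon for a synonym, the encoded protein never changes.

```python
import random
from itertools import product

# Standard genetic code (U, C, A, G ordering; "*" = stop) and synonym lists.
BASES = "UCAG"
AMINO_ACIDS = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODON_TABLE = {"".join(c): aa for c, aa in zip(product(BASES, repeat=3), AMINO_ACIDS)}
SYNONYMS = {}
for codon, aa in CODON_TABLE.items():
    SYNONYMS.setdefault(aa, []).append(codon)


def translate(mrna):
    """Translate a coding sequence codon by codon."""
    return "".join(CODON_TABLE[mrna[i:i + 3]] for i in range(0, len(mrna), 3))


def gc_score(mrna, target=0.55):
    """Hypothetical stand-in for a learned predictor: higher when GC content
    is close to an arbitrary `target` (0 is the best possible score)."""
    gc = sum(base in "GC" for base in mrna) / len(mrna)
    return -abs(gc - target)


def optimize(protein, score, steps=2000, seed=0):
    """Stochastic hill climbing over synonymous codons: resample one codon at a
    time and keep the change unless the score gets worse."""
    rng = random.Random(seed)
    codons = [rng.choice(SYNONYMS[aa]) for aa in protein]
    best = score("".join(codons))
    for _ in range(steps):
        i = rng.randrange(len(codons))
        old = codons[i]
        codons[i] = rng.choice(SYNONYMS[protein[i]])
        new = score("".join(codons))
        if new >= best:
            best = new
        else:
            codons[i] = old  # revert a change that made the score worse
    return "".join(codons), best
```

Swapping `gc_score` for a model’s predicted expression is the only change needed to make the loop model-guided; the synonym-only moves guarantee that every candidate still encodes the same antigen.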

For all of the things we hope (or fear) that AI will do, biological sequence design may be one of the most useful and consequential. We’re barely four years out from the first successful mRNA vaccines, and have a lot of unrealized potential ahead of us.